Why ChatGPT fails to interact like a human

A simple explanation of how ChatGPT works compared to human cognition

In previous posts, I have described the logic of the communication games people play, and the layers of strategic considerations hidden in our mundane discussions. In this post, I discuss how this understanding of human communication helps us see the inherent limitations of large language models like ChatGPT. This post is fairly long, but it can serve as a self-contained explainer of what LLMs are and how they compare with humans, without the need for prior technical knowledge.

The breakthrough of ChatGPT-3 in November 2022 took the world by storm. The uncanny ability of the program to engage in human-like conversations was strikingly different from the frustrating limitations of previous chatbots. People started to wonder whether AI was already surpassing human intelligence as ChatGPT successfully passed difficult exams like the bar exams, the CFA exam, and the SAT.

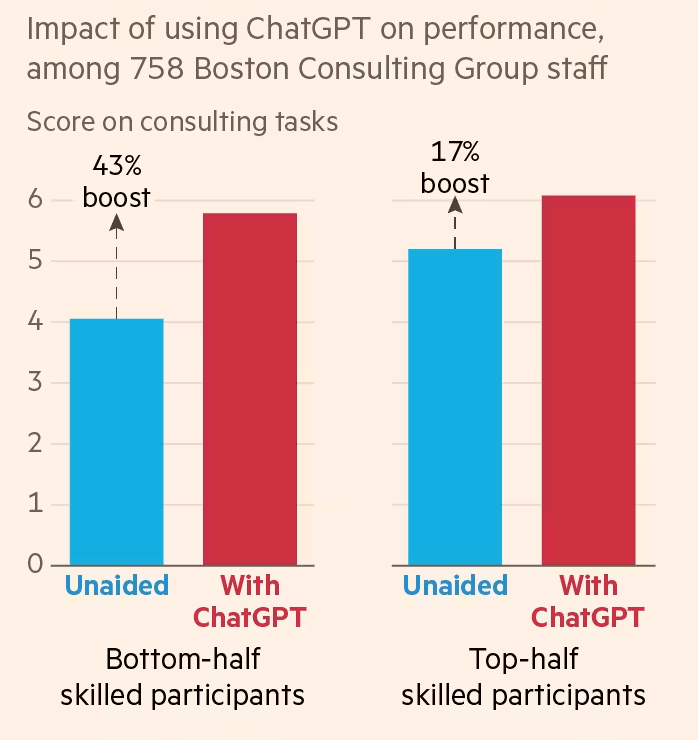

These skills can be put to good use. In a recent post, Sam Atis pointed out the ability of ChatGPT to enhance human performance.

In particular, ChatGPT makes a lot of technical tasks, like writing computer code, easy. As Arnold Kling points out the ability of ChatGPT to understand human requests written in natural language to write computer code is likely to drastically change the experience of programming. Overall, ChatGPT can extend the technical ability of a large proportion of the workforce and it is an impressive achievement.

However, at the same time, ChatGPT has been criticised for having hallucinations and, as the hype receded, many people pointed out that it was not as intelligent as it looked. In this post, I discuss how our understanding of human communication helps us see why large language models fail to behave fully in a human-like way.

How human communication works

In previous posts, I have explained how humans communicate. What we do quickly and without difficulty is actually a complex endeavour. A communication interaction is fundamentally cooperative: people exchange useful bits of information about themselves, others, and the outside world. The way we select what we say, our utterances, is also based on cooperative principles. Sperber and Wilson (1986) have argued, for instance, that we try to be as relevant as possible when discussing with each other by making utterances that are the most informative as possible and the least costly to process. Our whole communication is based on this understanding. We expect that others will make relevant utterances and we know that they are aware of our expectations. This common understanding allows people to communicate with utterances that make sense because of the context shared by the interlocutors.

Our production of utterances and their interpretation is embedded in a web of inferences: the conclusions we draw from the limited evidence contained in the verbal content we exchange. When Mary says something to Paul, he makes sense of what she says because of his expectation that Mary knows what Paul knows—including what Paul knows about what Mary knows—and that she will say something whose interpretation is the most informative in that context. Here are some examples.

Inferences about the speakers’ intentions:

Paul: Do you want to go to the cinema

Mary: I am tired

This seemingly trivial interaction is actually more complex than it seems as Mary is not literally answering Paul’s suggestion. Paul understands Mary because he expects her to say something relevant to his suggestion. Being tired is a reason not to go out. Paul knows that Mary expects he will interpret what she says as relevant to the situation. Paul can therefore interpret Mary’s statement as reflecting her intention to let him know that she doesn’t want to go to the cinema and wants to give him the reason why.

Inferences about the physical world:

Paul: Did you hear that John walked into a glass window

Mary: I am always worried about these, they can be so treacherous

Mary’s answer does not specify the reason why glass windows are treacherous. Paul can nonetheless easily make sense of her statement because he knows that both he and Mary share a basic understanding of human vision and light reflection on glass. Paul can therefore be confident that Mary interpreted Paul’s statement as indicating that John walked into a glass window because he did not see it. Her answer builds on this understanding and suggests glass windows are perilous because it is easy not to see them.

Inferences about social norms:

Paul: Sandy was asked to show her ID at the entrance of the bar

Mary: Lucky her!

Paul can understand Mary’s ironic remark because of a shared understanding that IDs are requested at bar entrances when one looks young and that looking young can be seen as flattering by women who are not very young anymore. Paul can therefore understand that Mary is not making a statement about Sandy being lucky to have her ID checked per se, but that she is lucky to be perceived as young enough to require showing her ID.

Our daily communications are articulated around such inferences involving mind reading, and the use of our knowledge of the shared context with our interlocutor, including the shared memories of past discussions and interactions. It is an incredible cognitive feat achieved with a brain working on 20 Watts of energy. These inferences shape and tame our language. They shape it because they determine what we say, they tame it because they prevent us from saying nonsensible things. These inferences can be seen as reflecting our underlying mental representations of the world with its causal relations and our memory of past events.1 In short: how we remember and understand the world.

How ChatGPT works

ChatGPT is a large language model (LLM) like Claude and LLama. LLMs work in what may seem surprising at first sight. What they do is simply predict the best word to add to previous words.2 Doing so sequentially, they build progressively whole sentences and paragraphs of text. Somewhat surprisingly, given their effectiveness, the general principles of LLMs’ inner workings are relatively easy to understand with an introductory-level knowledge of matrices and linear algebra.

In this section, I provide a non-technical explanation of how LLMs work. For more details about LLMs, Sean Trott and Timothy B Lee wrote a great explainer here. It can be complemented with the free and accessible book written by Stephen Wolfram (2023) on ChatGPT; Grant Sanderson’s great video explaining how they work; and Jay Alamar’s excellent page explaining LLMs with visual illustrations.

To start with LLMs are trained on a gigantic amount of textual data. For ChatGPT, it represents a total of around 5 trillion words. LLMs use this data to learn existing connections between words. Formally, after their training, they place each word in a virtual space and words whose meaning is closer to other words are closer in that space. The illustration below represents this idea with a 3D space. In LLMs, this space has many (many!) more dimensions and can’t be represented graphically.

A difficulty with words is that they can have different meanings depending on context. For instance “model” can refer to a mathematical model or to a fashion model. The innovation of LLMs is their use of a procedure to give a specific meaning to each word using its context. This procedure is called a Transformer.3 It identifies the other words in the text that are the most relevant to give meaning to a given word.

For each word, a Transformer gives weight to previous words as a function of their relevance to the current word being considered. In the illustration below, “it” is identified to be connected with “a” and “robot”. The model’s training on existing textual data and on relationships between words is also used to generate these weights. These weights are considered to focus the “attention” of the LLM when considering the meaning of a given word.

LLMs use many transformers to identify the different ways context influences the meaning of the different words. The effect of running a sentence through layers of transformers may be to give LLMs a kind of understanding of the meaning of how the different words relate to each other in the text considered.

The LLM then uses this information about the meaning of each word of a text, given their context, to produce likely candidates for the next word. One candidate word is then selected randomly. To have some “creativity”, the LLM does not always pick the most likely word but uses the words’ score to form a probability to sample them. The picture below gives an illustration of the candidate words with probabilities to be selected for being the next word in the sentence.

What chatGPT produces

What it means, in practice, is that ChatGPT and other LLMs generate text based on the regularities observed in human language.

It’s just saying things that “sounds right” based on what things “sounded like” in its training material […] ChatGPT is “merely” pulling out some “coherent thread of text” from the “statistics conventional wisdom” that it’s accumulated. - Wolfram (2023)

What LLMs are missing is the ability to reason like humans, outside of the production of speech. They are unable to use the type of non-linguistic inferences made by Paul and Mary to “read the situation”. However, even if LLMs are not reasoning properly as humans do, they are leveraging massive linguistic data generated by humans who were able to reason to decide what to say.4 As Henry Farrell puts it, an LLM “is a by-product of collective agency, without itself being an agent.” LLMs can therefore look like they are reasoning because they are piggybacking on the work of humans who have embedded their way of thinking into the texts they have written.

For instance, if you train a parrot to speak, when you ask “who are you” it may say “I am Joe the parrot” instead of launching into nonsensical utterances. However, it does not do so because it is smart and thinks that giving one’s name is the right way to answer a question about one's identity. The parrot answers with a relevant and grammatically correct sentence because it was trained by humans. It is the same thing with LLMs, they have famously been described as stochastic parrots (programmes that generate language randomly in a parrot-like way).5 Parrots can seem uncannily able to speak like humans, but they should not fool us about their ability to think like humans.

Nonetheless, leveraging a huge amount of human data, LLMs are at first sight fairly impressive. They are able to give technical answers to generic questions better than most people. They can give you answers to scientific, legal or historical questions. Moreover, to some extent, LLMs behave as if they can understand what people think when commenting on a situation where different people interact.6

LLMs’ limitations

Despite these achievements, LLMs still have glaring limitations.

Inability to solve simple problems

Rohit Krishnan has a recent piece on the inability of LLMs to solve simple problems. Consider the simple question: “Name three famous people who all share the exact same birth date and year.”7 ChatGPT is usually unable to provide correct answers.

ChatGPT is also unable to solve problems that small children can often solve, like playing Wordle, Sudoku or crosswords. Solving these problems requires using rules of logic and memory, which is something that a Transformer seems to struggle with, even at a simple level.

It is common in behavioural economics to discuss the idea of human cognition as being based on a System 1 (intuitive and fast) and a System 2 (rational and slow).8 I tend to find this distinction a bit exaggerated.9 However, let’s use it here for convenience. We think of System 1 as our intuitions that reflect our learned experience. These intuitions help us recognise patterns in what we observe and point to good responses to these. They are fast, but may not be suitable when we face novel and unusual problems. In such situations, we can supplement our intuitions with step-by-step thinking processes. A good analogy seems to be that LLMs’ architecture generates a decision-making process more akin to System 1 than to System 2. It recognises patterns and finds intuitively the best way to generate utterances, but it does not have the ability to engage in formal computations or logic to find solutions in novel situations.

It might be best to say that LLMs demonstrate incredible intuition but limited intelligence. It can answer almost any question that can be answered in one intuitive pass. And given sufficient training data and enough iterations, it can work up to a facsimile of reasoned intelligence. - Rohit Krishnan

Underperforming in mathematics

Compared to humans, computers are extremely good at computation. In comparison, LLMs are not that good at it. In their results at exams, ChatGPT models seem to struggle the most in mathematics. Because ChatGPT knows a lot of facts, you can ask it for information such as existing theorems and general procedures. But when asked to solve actual problems it will often produce, very confidently, answers riddled with mistakes. Reviewing ChatGPT’s mathematical ability, this is what Frieder et al. (2024) concluded:

ChatGPT and GPT-4 can be used most successfully as mathematical assistants for querying facts, acting as mathematical search engines and knowledge base interfaces. GPT-4 can additionally be used for undergraduate-level mathematics but fails on graduate-level difficulty. Contrary to many positive reports in the media about GPT-4 and ChatGPT's exam-solving abilities (a potential case of selection bias), their overall mathematical performance is well below the level of a graduate student. - Frieder et al. (2024)

Human-like inferences

Unlike humans, LLMs do not have an in-built capacity to make novel inferences outside of the textual context they face. That being said, LLMs are trained on text generated by humans. Since human reasoning ability may be reflected in these texts, it is possible that LLMs may learn to reproduce human-like reasoning abilities and even apply such an ability in novel contexts.10 Indeed, the performance of LLMs suggests that something of this sort is happening.

However, human reasoning is only imperfectly retrievable from the text itself (a text conveys only partially the thoughts that generated it). For frequent enough types of verbal exchanges, LLMs may have been trained on enough output of human reasoning to generate human-like interactions. But in a rather novel situation, LLMs' training may be too limited for them to have extracted general principles to be applied in that particular situation.

A challenge faced by LLMs is that the number of meaningful texts that can be written is unimaginably large. Even with the vast amounts of data used to train LLMs, there will always be unique situations and context-specific nuances that are not well-represented in the training data. LLMs may struggle to make context-specific inferences in rare contexts not encountered in their training data.

Cognitive scientist Sean Trott provided examples of situations where LLMs perform significantly worse than humans at understanding utterances when it requires making not entirely trivial inferences about what is meant. One type of situation where they struggle seems to be when they have first to infer what somebody knows, then use this knowledge to interpret what somebody means. Consider the following scenario:

It is reasonably easy for a human to appreciate that since both you and Jonathan saw the blinking light and (we are led to assume by the text) understood that the heating system is broken, Johnathan cannot be making a request (since it could not be satisfied). Relative to humans, LLMs are much more likely to make mistakes interpreting this scenario.

LLMs exhibit evidence consistent with a mentalizing capacity, albeit one below the level of most neurotypical adult humans. - Sean Trott

Illustrating this fact, ChatGPT-4 did very well at providing answers exhibiting the right inferences in the first two of the three dialogues between Paul and Mary above: when Mary was tired or when she expressed concerns about glass doors. This is pretty good. However, it did not identify the third implication very well, failing to appreciate that Mary likely thought Sandy was lucky to be perceived as younger than her age.11

Hallucination and conversational limitations

Hallucinations

One of the problems with LLMs is that the texts they generate are often factually incorrect. The figure below shows OpenAI’s assessment of the factual accuracy of the different GPT models compared to humans (represented by a benchmark of 100).

Gary Marcus explained this phenomenon in a recent post:

LLM "hallucinations" arise, regularly, because (a) they literally don't know the difference between truth and falsehood, (b) they don't have reliably reasoning processes to guarantee that their inferences are correct and (c) they are incapable of fact-checking their own work. - Gary Marcus

Lack of self-consciousness in writing style

Another issue with LLMs is their lack of self-consciousness when writing. ChatGPT’s style is, for instance, marked with an overuse of flowery adjectives and adverbs. John Biggs summarises the issue that way: ChatGPT “writes like a freshman college student trying to pad an essay with extraneous words.”

In addition, when writing a long text, ChatGPT seems to write in a mechanical way, using a sequence of short paragraphs with the same length. The writing seems consistent locally, but the text often lacks direction and purpose. It scores high in English proficiency but many readers may wonder: what is the point this text is trying to convey? This is likely due to the feature of the attention model (perhaps better suited for local attention) and to the lack of purpose of the LLM (it does not want to say anything but is just producing meaningful text following your prompt).

Here we face a key difference with a human being. A person has an intention to convey some specific information when communicating. This intention exists prior to the start of the utterances and they guide their generation. For an LLM, the only thing the model relies on is the user’s prompt that it will use to initiate the generation of text. In addition, in the process of communicating, a human is self-aware of the style and words used and their likely impact on understanding. Variations in paragraph length and rhythms may, for instance, help focus the attention of the reader, instead of a monotone and repetitive style.

Misplaced epistemic confidence

Finally, LLMs seem overly tuned to display confidence. Combined with their hallucination, it leads to strange interactions where an LLM says something wrong confidently, you point out the mistake, and the LLM utters confidently something else that is also wrong. Talking with LLMs is like talking with Dory the fish: they are linguistically articulate, but they can say nonsensical things in a plausible way, without any self-awareness of it, and without an ability to learn about it in later interactions.

Human beings in such situations would realise that their level of confidence may be misplaced. Caring for their credibility as a communicator they would hedge accordingly, indicating carefully their degree of certainty/uncertainty about the information they provide. This is a key aspect of the pieces of information provided by humans: they come with additional information about their likely reliability. In contrast, LLMs tend to adopt a blanket overly confident outlook that reduces the ability of the reader to appreciate the degree of confidence it can give to every piece of information.

As a consequence, reading LLM’s long-form texts comes with a very particular feeling: the uncertainty all throughout that the LLM is saying something meaningful for a good reason, or whether it is simply making things up or rambling without a clear purpose. It requires extra care, and cognitive effort, to parse LLM texts relative to those written by humans who can be trusted to maximise the relevance of their answers.

What next

The future rate of progress of LLMs is uncertain. They may hit a wall because of the large amount of energy resources required to train these models. Zuckerberg has foreseen the building of a data centre requiring the electric capacity of a nuclear power plant to run these models.

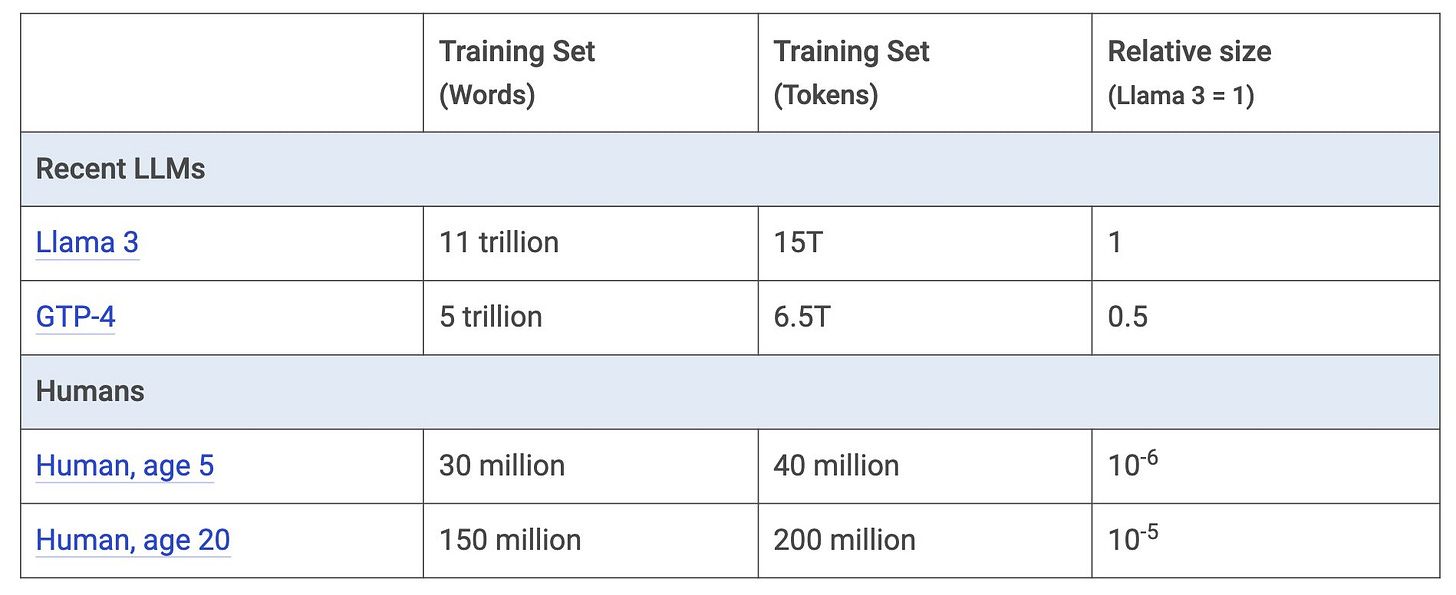

LLMs may also hit a wall because of the limited amount of textual data available to improve further model training. They already use a tremendous amount of textual data. Meta’s Llama 3’s data set contained 11 trillion words. This may already be 25% of all the textual data available for training in the world.

Finally, LLMs may experience slower progress onwards, because their architecture may limit what they can achieve, even with much more data. A recent video from Computerphile made the same argument for AI-generating images. On that point, Stefen Wolfram said the following:

Given [ChatGPT’s] dramatic—and unexpected—success, one might think that if one could just go on and “train a big enough network” one would be able to do absolutely anything with it. But it won’t work that way. Fundamental facts about computation—and notably the concept of computational irreducibility—make it clear it ultimately can’t. - Wolfram (2023)

Noticeably, since the release of ChatGPT-4, the progress in the performance of LLMs has been relatively limited and the number of monthly users has plateaued over the last year.

It does not mean that further progress in reasoning ability won’t be achievable by AI, but that it may not be delivered by just scaling up the architecture of existing LLMs. One can envisage for instance adding modules that perform the inferences humans make to generate speech.

We might be able to add modules for reasoning, iteration, add persistent and random access memories, and even provide an understanding of physical world. At this point it feels like we should get the approximation of sentience from LLMs the same way we get it from animals, but will we? It could also end up being an extremely convincing statistical model that mimics what we need while failing out of distribution. - Rohit Krishnan

It is also the take of Azeem Azhar in a recent post:

New architectural breakthroughs are likely needed to build AI systems capable of robust causal reasoning, planning and world understanding. We will need more than just data to get there. - Azeem Azhar

It is hard to anticipate whether it will come soon or not, but it is possible that we may experience a lull in the progress of performance as radically new innovations in architecture may be required to achieve a fully human-like ability to communicate (or more).

References

Bender, E.M., Gebru, T., McMillan-Major, A. and Shmitchell, S., 2021, March. On the dangers of stochastic parrots: Can language models be too big?🦜. In Proceedings of the 2021 ACM conference on fairness, accountability, and transparency (pp. 610-623).

Frieder, S., Pinchetti, L., Griffiths, R.R., Salvatori, T., Lukasiewicz, T., Petersen, P. and Berner, J., 2024. Mathematical capabilities of chatgpt. Advances in Neural Information Processing Systems, 36.

Jiang, H., 2023. A latent space theory for emergent abilities in large language models. arXiv preprint arXiv:2304.09960.

Jones, C.R., Trott, S. and Bergen, B., 2023, June. Epitome: Experimental protocol inventory for theory of mind evaluation. In First Workshop on Theory of Mind in Communicating Agents.

Kahneman, D., 2011. Thinking, fast and slow. Macmillan.

Stanovich, K.E. and West, R.F., 2000. Advancing the rationality debate. Behavioral and Brain Sciences, 23(5), pp.701-717.

Wolfram, S., 2023. What is ChatGPT doing... and why does it work?.

Yang, H., Meng, F., Lin, Z. and Zhang, M., 2023. Explaining the Complex Task Reasoning of Large Language Models with Template-Content Structure. arXiv preprint arXiv:2310.05452.

Cognitive scientists sometimes talk of generative models or mental models.

The word “predict”, commonly used in machine learning, reflects the fact that LLMs are statistical models trained to predict the next word on existing data. With a novel text, it tries to predict what would be the most likely next word, if the text was written by a human. Note that, formally, it predicts tokens that can be less than a word, but I’ll use words for simplicity.

The acronym GPT stands for Generative Pre-trained Transformer.

It is also the case that LLMs’ training data includes texts discussing such inferences explicitly. LLMs may learn to be able to make inferences about glass windows because their training data includes physics textbooks and inferences about mindreading because their training data includes textbooks in psychology.

Bender et al. (2021).

See also: https://arxiv.org/abs/2302.08399

The question originally came from a post by Tyler Cowen.

These two systems, popularised by Kahneman in his book Thinking Fast and Slow (2011) were introduced by Stanovich and West (2000).

I do not think these two systems should be seen as separated as intuitive and slow processes are likely nested in each other and not separated. Consider, for instance, solving a formal mathematical problem. It may seem part of the System 2 domain of slow and rational thinking. But typically we use our intuition to anticipate likely solutions and paths to follow to solve the problem. It is only at some choke point in the thinking process that we may need to stop and carefully implement non-intuitive formal procedures to progress towards a solution. Doing so may reveal that a path that was thought to be promising to solve the problem does not work. We then use our intuition to look for the next most promising path.

For formal arguments compatible with such a possibility, see, for instance, Jiang (2023) and Yang et al. (2023).

Here are ChatGPT4’s answers to the question “What does Mary’s answer mean in this dialogue:":

Dialogue 1: In this dialogue, Mary's response, "I am tired," typically implies that she is not interested in going to the cinema, primarily due to feeling tired. Her statement suggests that her current state of tiredness might prevent her from enjoying or even considering an activity like going to the cinema. It's a polite way of declining the invitation without explicitly saying "no."

Dialogue 2: In this dialogue, Mary's response indicates her concern about the potential hazards of glass windows that are not clearly marked or visible, which can lead people to accidentally walk into them. By saying "I am always worried about these, they can be so treacherous," she is expressing her ongoing anxiety about the safety risks associated with such windows. Her use of the word "treacherous" highlights how deceptive and dangerous she finds these situations, where clear glass can be mistaken for an open pathway.

Dialogue 3: Mary's response, "Lucky her!", in this context could be interpreted as sarcastic. It implies that Mary doesn't really consider the situation—being asked to show an ID at a bar entrance—as fortunate. Instead, she might be suggesting that it's either a trivial or annoying experience to be singled out to show identification, possibly hinting at the inconvenience or the implication of looking younger than one actually is.

Fun article and great illustrations. How does chain of thought prompting and its iterative output differ from step-wise clear thinking?

this seems factually wrong https://x.com/mark_cummins/status/1788949893903511705 I would rather agree with Yann LeCun "In 4 years, a child has seen 50 times more data than the biggest LLMs." https://www.linkedin.com/posts/yann-lecun_ive-made-that-point-before-llm-1e13-activity-7156484065603280896-QH63/